Note: this post contains my notes on the video below - "How do I store data in Windows Azure tables?". I thought these notes may be useful for any other Microsoft .NET architects or developers out there. If you are not involved with software engineering, you'll find this really boring.

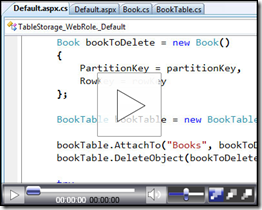

Microsoft Developer Network (MSDN) video:

How do I store data in Windows Azure tables?

A couple of days ago, I found the following video was published on MSDN:

How Do I: Store Data in Windows Azure Tables? (15 minutes, 37 seconds) http://msdn.microsoft.com/en-us/azure/dd483299.aspx

You can download the video from the page above; multiple formats are available. You can also click here to download the 34.5 MB WMV video directly to your local machine (right-click and choose "Save As"; Windows Media Player required).

The first few minutes of the video provide a lot of good information - here are some of the notes I took today while watching the video:

- From what I understand, Windows Azure Storage is currently accessed through an HTTP-based, REST-style interface.

- That said, in the Windows Azure SDK Microsoft gives us an alternative: a managed set of classes that wrap the HTTP calls to Azure storage. You'll find these in the 'Samples' directory of the Windows Azure SDK; add a reference to "StorageClient.dll" and you can then use the Microsoft.Samples.ServiceHosting.StorageClient namespace. You also need a reference to System.Data.Services.Client.

- In Windows Azure tables, the basic unit of storage is called an Entity, so for each type that you want to store in a table you create a class that derives from TableStorageEntity (present in Microsoft.Samples.ServiceHosting.StorageClient).

- TableStorageEntity provides two properties: PartitionKey and RowKey. These are the only two properties that Azure storage requires for entities and the only two properties that it really cares about.

- PartitionKey is used by Azure Storage if it needs to distribute the table entities over multiple storage nodes. For example, if you have a table of books, your PartitionKey could be the "author" property; this way books from the same author will be stored in the same Azure storage node, so our queries would be more efficient. The video above mentions that another video will have more information on table partitions.

- RowKey represents the unique id of the entity within the partition it belongs to. For the book table example, the RowKey was set to the book's title - so each book could be identified by its PartitionKey (author) and RowKey (title).

- When you deploy your application to Windows Azure, your data is ultimately stored in Azure Storage. But you can create a local "Storage Table Class" to interact with programmatically - for this you can create another wrapper class that derives from Microsoft.Samples.ServiceHosting.StorageClient.TableStorageDataServiceContext (in the example they call this class BookTable).

- So that you don't hard code values, there's a method that loads the configuration for TableStorageDataServiceContext: StorageAccountInfo.GetDefaultTableStorageAccountFromConfiguration(). You need to add the configuration settings to a couple of service XML configuration files.

- The settings AccountName, AccountSharedKey and TableStorageEndPoint are declared in the XML file ServiceDefinition.csdef.

- The actual values for AccountName, AccountSharedKey and TableStorageEndPoint are defined in the XML file ServiceConfiguration.cscfg.

- While in development, you can use the local storage development account, so your TableStorageEndPoint is something like "http://127.0.0.1:10002".

- There's a "Development Storage" desktop app that allows you to check if your local TableStorage is running (and start it if needed).

- Going back to our BookTable class (the one that derives from TableStorageDataServiceContext), we can now create a method to perform a query against our Azure table storage that returns all entities that match a given type. In the example this was a "DataServiceQuery<Book> Books()".
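
To make the PartitionKey/RowKey idea from the notes above concrete, here is a minimal, language-neutral sketch in Python. It is not the StorageClient API: the `Book` and `BookTable` classes below are in-memory stand-ins I made up for illustration, and the hash-based `node_for` rule is purely an assumption (the real service decides internally where partitions live). It only shows that an entity is identified by the pair (PartitionKey, RowKey) and that all entities sharing a PartitionKey end up together.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Book:
    author: str  # plays the role of PartitionKey in the video's example
    title: str   # plays the role of RowKey in the video's example
    year: int = 0

    @property
    def partition_key(self):
        return self.author

    @property
    def row_key(self):
        return self.title


class BookTable:
    """In-memory stand-in for a table context (hypothetical, not the SDK class)."""

    def __init__(self, node_count=3):
        self.node_count = node_count
        self._rows = {}  # (partition_key, row_key) -> Book

    def node_for(self, partition_key):
        # Assumed placement rule, for illustration only: the same
        # partition key always maps to the same node number.
        digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big") % self.node_count

    def add(self, book):
        key = (book.partition_key, book.row_key)
        if key in self._rows:
            raise KeyError(f"duplicate entity {key}")
        self._rows[key] = book

    def get(self, partition_key, row_key):
        # The pair (PartitionKey, RowKey) identifies exactly one entity.
        return self._rows[(partition_key, row_key)]

    def books(self):
        # Counterpart of the "Books()" query: every entity of this type.
        return list(self._rows.values())

    def books_by_author(self, author):
        # A query that stays inside a single partition.
        return [b for (pk, _), b in self._rows.items() if pk == author]
```

With this sketch, `table.get("Herbert", "Dune")` looks up one book by its two keys, while `table.books_by_author("Herbert")` only ever touches one partition, which is the reason the notes give for choosing the author as the PartitionKey.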

There's a lot more info in the video, including a way to create the table in Azure storage on a first request, based on the classes discussed above. In the video, they mention that this was written for ASP.NET users, since each HTTP call to an ASPX page generates a new instance of the page class. But I found it interesting that there's a mechanism to create the storage tables in Azure based on our classes - and only once!
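
The "create the tables only once, even though every request builds a new page object" idea can be sketched with a generic run-once guard. This is not the mechanism from the video, just a common pattern under my own assumptions: `OnceInitializer`, `create_tables`, `TABLES` and `Page` are hypothetical names, and the list `created` merely records how often the (pretend) table creation ran.

```python
import threading


class OnceInitializer:
    """Runs a setup function at most once, even with concurrent callers."""

    def __init__(self, create_tables):
        self._create_tables = create_tables
        self._lock = threading.Lock()
        self._done = False

    def ensure_created(self):
        if self._done:  # fast path once setup has already happened
            return
        with self._lock:
            if not self._done:  # re-check while holding the lock
                self._create_tables()
                self._done = True


created = []  # records each (pretend) table-creation call


def create_tables():
    created.append("Books")


# Shared across all requests, like a static member in a web application.
TABLES = OnceInitializer(create_tables)


class Page:
    """Stand-in for a page class that is instantiated on every request."""

    def __init__(self):
        TABLES.ensure_created()


# Simulate five requests; each one builds a new Page instance.
for _ in range(5):
    Page()
```

If `create_tables` raises, the flag stays unset, so the next request tries again; whether that is desirable depends on the application.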

I copy below a thread I started on the .netTiers forums, requesting a Windows Azure layer for .netTiers and a possible solution to implement it.

If you are a Microsoft .NET developer and you use SQL Server, you should check out .netTiers - it's simply amazing. For more information on .netTiers, check out:

.netTiers Application Framework

http://nettiers.com/

A few days ago I started a thread on the .netTiers forums:

.netTiers and Windows Azure?

http://community.codesmithtools.com/forums/p/9327/34687.aspx

Below I publish an ongoing conversation with Jeff from the .netTiers team - it would be great if .netTiers worked with Azure!

ehuna posted on 02-08-2009 10:49 PM

Just curious if anyone from the .netTiers team has tried out a test app on Windows Azure -

http://www.microsoft.com/azure/default.mspx

I have a current application that uses .netTiers and would love to be able to host it on Windows Azure. Ideally I'd like to use the same code in my presentation layer while .netTiers magically makes it work with the Windows Azure data access layer.

Would that be possible with the current .netTiers version?

If not, what would it take to make it work?

Thanks,

Emmanuel

www.ehuna.org

SuperJeffe replied on 02-09-2009 9:16 PM, Suggested by blake05

I am not an azure expert....I know next to nothing about it.

Great question. Nettiers requires relational model that Codesmith can read to generate code. Not sure how SDS would translate into that world. Now, if you can design a model in Sql Server, host that in Azure somehow, then maybe.

Feel free to continue this discussion...it would be really sweet to get it working.

jeff

----------------------------------------------------------------------

Member of the .NetTiers team | Visit http://www.nettiers.com

----------------------------------------------------------------------

ehuna replied on 02-14-2009 2:56 PM

Thanks Jeff - here's a summary of what I found out that I hope can help. A couple of days ago, I found the following video was published on MSDN:

How Do I: Store Data in Windows Azure Tables?

http://msdn.microsoft.com/en-us/azure/dd483299.aspx

The first few minutes of the video provide a lot of good information and, I think, a potential solution for making .netTiers work with Windows Azure. Here are some of my notes from the video:

- From what I understand, Windows Azure Storage is currently accessed through an HTTP-based, REST-style interface.

- That said, in the Windows Azure SDK Microsoft gives us an alternative: a managed set of classes that wrap the HTTP calls to Azure storage. You'll find these in the 'Samples' directory of the Windows Azure SDK; add a reference to "StorageClient.dll" and you can then use the Microsoft.Samples.ServiceHosting.StorageClient namespace. You also need a reference to System.Data.Services.Client.

- In Windows Azure tables, the basic unit of storage is called an Entity, so for each type that you want to store in a table you create a class that derives from TableStorageEntity (present in Microsoft.Samples.ServiceHosting.StorageClient).

- TableStorageEntity provides two properties: PartitionKey and RowKey. These are the only two properties that Azure storage requires for entities and the only two properties that it really cares about.

- PartitionKey is used by Azure Storage if it needs to distribute the table entities over multiple storage nodes. For example, if you have a table of books, your PartitionKey could be the "author" property; this way books from the same author will be stored in the same Azure storage node, so our queries would be more efficient. The video above mentions that another video will have more information on table partitions.

- RowKey represents the unique id of the entity within the partition it belongs to. For the book table example, the RowKey was set to the book's title - so each book could be identified by its PartitionKey (author) and RowKey (title).

- When you deploy your application to Windows Azure, your data is ultimately stored in Azure Storage. But you can create a local "Storage Table Class" to interact with programmatically - for this you can create another wrapper class that derives from Microsoft.Samples.ServiceHosting.StorageClient.TableStorageDataServiceContext (in the example they call this class BookTable).

- So that you don't hard code values, there's a method that loads the configuration for TableStorageDataServiceContext: StorageAccountInfo.GetDefaultTableStorageAccountFromConfiguration(). You need to add the configuration settings to a couple of service XML configuration files.

- The settings AccountName, AccountSharedKey and TableStorageEndPoint are declared in the XML file ServiceDefinition.csdef.

- The actual values for AccountName, AccountSharedKey and TableStorageEndPoint are defined in the XML file ServiceConfiguration.cscfg.

- While in development, you can use the local storage development account, so your TableStorageEndPoint is something like "http://127.0.0.1:10002".

- There's a "Development Storage" desktop app that allows you to check if your local TableStorage is running (and start it if needed).

- Going back to our BookTable class (the one that derives from TableStorageDataServiceContext), we can now create a method to perform a query against our Azure table storage that returns all entities that match a given type. In the example this was a "DataServiceQuery<Book> Books()".

There's a lot more info in the video, including a way to create the table in Azure storage on a first request, based on the classes discussed above. In the video, they mention that this was written for ASP.NET users, since each HTTP call to an ASPX page generates a new instance of the page class. But I found it interesting that there's a mechanism to create the storage tables in Azure based on our classes - and only once!

With the above in mind, here's an idea on how .netTiers could work with Azure storage:

- We define our schema in our local SQL Server, just like we do today.

- We run CodeSmith and load up a future version of .netTiers that has support for Azure Storage integration.

- The .netTiers templates would retrieve the schema for SQL (like today), but would create the classes discussed above. Parameters could be specified to generate the correct XML configuration files as well.

- In the .netTiers configuration you would specify if you are using SQL Server or Azure Storage.

- There would be a mechanism to detect on first run that the tables need to be created in Azure Storage (see video).

We then end up with the same tables we had in SQL, created in Azure Storage (locally or in the cloud!). We can use our standard calls to .netTiers that we all love to manage our data.

This would be great not only for new projects, but if it works it would mean that existing projects could continue using the same code - instead of saving to SQL Server, the new .netTiers wrapper classes would write to Azure Storage.
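
As a rough illustration of what such a generated layer would have to do, here is a small Python sketch that maps one relational row onto a flat entity with a PartitionKey and a RowKey. Everything in it is an assumption for discussion: `row_to_entity` is a hypothetical helper, not part of .netTiers or the Azure SDK, and choosing which column becomes the PartitionKey is exactly the design decision the templates would have to expose as a parameter.

```python
def row_to_entity(row, partition_column, primary_key_columns):
    """Map a relational row (a dict) to a flat entity with the two required keys.

    partition_column:    column whose value becomes the PartitionKey (assumed choice)
    primary_key_columns: primary key column(s), joined to form the RowKey
    """
    entity = dict(row)  # keep every original column as an entity property
    entity["PartitionKey"] = str(row[partition_column])
    entity["RowKey"] = "_".join(str(row[c]) for c in primary_key_columns)
    return entity


# Example: a row from a hypothetical Books table in SQL Server.
sql_row = {"BookId": 42, "Author": "Herbert", "Title": "Dune"}
entity = row_to_entity(sql_row, partition_column="Author",
                       primary_key_columns=["BookId"])
```

Using the SQL primary key as the RowKey keeps it unique within each partition, since the primary key is already unique across the whole table.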

What do you think?

-------------------------------------------------------------------------

Hopefully there's more to come. Stay tuned.
